When I was a Software Quality Engineer, I can’t tell you how often I’d explain what testing we [were doing / had done / would do], and all around me people’s eyes would glaze over. Developers, PMs, EMs, etc.: people who I knew were invested in the quality of our product, and yet they weren’t interested in the details of our testing.[1]

Being the scientific person that I am, I tried a variety of approaches to get them more engaged. I explained it in different ways, both more abstract and more targeted. I tried smaller audiences and bigger audiences, written documents and discussions during meetings. Some worked better than others (and some day I’d love to tell you all about what I learned about communicating with my peers!), but I never achieved my goal: consistently getting people to care about testing.

For a while, I thought this was a problem. I’m passionate about testing, and I find it endlessly interesting to anticipate what our users will do that might break things, or what will happen when millions of users all use a feature at the same time. (What happens when hundreds of millions of desktop clients all try to phone home to our servers to check for updates at the same time? Best bet: our servers fall over!) I’ve always had a few coworkers who were just as geeky about testing as I am, and we enjoyed digging into the details together. So the other people, the ones who weren’t interested, must have something wrong with them, right? They must not care as much about testing as I do, right?

But the more I looked at the evidence, the more I realized they shouldn’t care about testing…and neither should I!

You shouldn’t care about testing

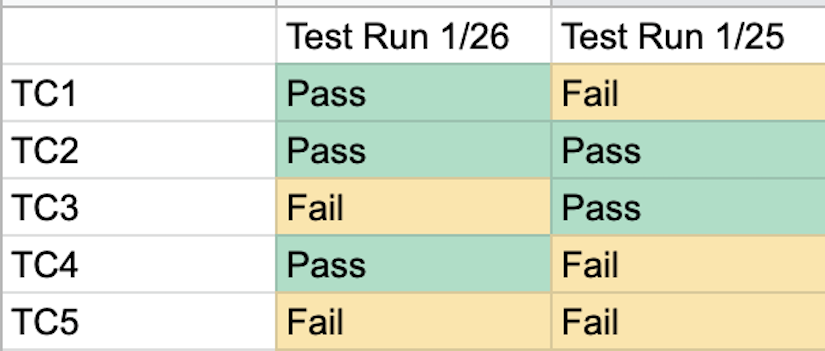

When we talk about testing, often we talk about which test cases we have or haven’t run and whether they passed or failed. But this isn’t useful information without a much broader context.

One time, I asked a PM to review the tests I had planned for a feature. He skimmed them, but it was a long list of scenarios with both too much detail and not enough detail. He couldn’t give meaningful feedback about whether they were the right tests, because he couldn’t quickly tell whether they covered all the risky parts of the feature. Because of how brains work, spotting gaps in testing from a list of more than about 6 tests is virtually impossible.[2]

Another example was when we would report status for massive, cross-functional software projects, summarized for our directors and VPs. The status report included a table that was supposed to summarize testing, but it was a challenge to figure out what to say that was both brief and meaningful. QEs might write, “100% of testing complete”, but that wouldn’t tell you whether the tests passed or failed, or how much testing we’d have to re-run after blocking bugs were fixed. We could show a graph of the bug find/fix trend, which might tell you we were finding fewer and fewer bugs, so quality was probably improving… or it might just mean that all the QEs had been pulled off the project, so no testing was being done and the feature was still a hellscape for users.

You should care about impact

Any discussion of testing needs to be in the context of what users are truly likely to do, and of which parts of the software system are fragile (likely to fail in a spectacular way) versus which parts are robust (likely to be resilient, or to fail in ways that are easy to recover from).

Back to that original scenario: I was in a meeting full of coworkers who I knew cared deeply about quality. Here’s what I did instead: I started talking about the impact of the testing. “We found this serious blocking bug, and fixing it will push back our next release date by a week.” Or, “We tested across all browsers, and have confidence that we can ship to all of our customers on time.”

When I framed it in terms of impact, everyone paid attention. They’d ask cogent questions, such as asking for more details about the bug, or whether we were confident there weren’t other bugs like it. They’d start brainstorming ways to cut features or cut work in order to still hit the original date. They engaged with the state of quality… because I framed it in a way that mattered to them.

This meant I had to do my homework about the impact of my test results before reporting on the testing! I had to review the bugs with the responsible triagers (PM, lead dev, etc.) to make sure we knew, quickly, which ones were blockers and which weren’t, and whether fixing the blockers would be easy or hard. Then, when we got to the meeting, I could report on the impact.

And what should we have put in those executive status summaries? We settled on stoplight colors! Green meant everything was on track from a testing perspective. Yellow meant there were concerns, but we had a plan to mitigate them. Red meant something was wrong that might impact the schedule, and we didn’t have a solution yet.[3] We’d give a brief description of what the color meant, plus a link to a longer document for anyone who wanted details such as which tests were run. In that document, the tests were listed alongside the risks and the quality metrics we were watching.

Does this resonate for you, or do you have a different experience? I’d love to hear what’s worked for you.

Footnotes

- [1] To be fair, my eyes usually glazed over for a lot of their updates, too. I could write a whole other version of this post about how nobody cares about code, either. ;)

- [2] I developed a strategy for getting feedback about gaps in testing without making people’s eyes glaze over at a long list of test cases, which I call reviewing testing variables (catchy, right?). I’ll write a post about that sometime.

- [3] For accessibility, I recommend using unique shapes as well as colors: for example, a green heart, a yellow warning sign, and a red X. (I meant to include example emoji, but my website won’t show them today. Once a QA Engineer, always a QA Engineer?)